What is computer vision? Where has it come from? Twenty years ago only a handful of Engineers were working on it as an esoteric research topic, but now mass-market products like the Microsoft Kinect, Google’s driverless cars and facial recognition systems use computer vision in a fast, cheap and effective way. What happened?

One reason is that computer vision requires a lot of processing, and computers have only recently become fast enough to handle the workload. However, this is not the only reason computer vision has gone from an academic dream to a consumer reality.

The main reason is that about 10 years ago Engineers started incorporating machine learning into computer vision, which revolutionized the field. Before machine learning, researchers set themselves incredibly hard mathematical problems in order to get a computer to recognise objects in an image. After machine learning was introduced, these tasks became far easier: largely a matter of training a computer to learn what objects look like. This freed academics from the mathematical black hole they were in and allowed them to pursue more interesting problems in the field.

This blog post tells the story of computer vision, explaining why the introduction of machine learning was so significant. It starts by explaining the main objectives of computer vision and why object recognition is a fundamental goal. It goes on to show why this is a complicated problem and how Engineers initially went about tackling it. It ends by describing how machine learning is used to make these previously arduous challenges much quicker and easier.

I specialized in computer vision at university, spending a year studying it. This post is something of an ode to the field, as I have an affinity for it. Only basic maths knowledge is needed to understand it.

An Engineer’s Problem

The difference between science and engineering lies in their objectives:

The role of science is to discover the truth about the world. It tries to explain natural phenomena. If a scientist can correctly predict how natural events happen using the scientific method (reason, logic, maths), they are doing their job.

The role of Engineering is to provide people with what they need and want. It uses the scientific method to create products that do what people want them to do. If an Engineer can produce a product that people want, they are doing their job.

Computer vision is based on an intuitive want, namely ‘I want a computer to be able to see images like humans see them’. Therefore it is fundamentally an Engineering problem. If we can satisfy this want, we have done our job.

While this goal may be an obvious one to pursue, it is not so obvious how to approach it. The first question that arises is ‘what exactly about human vision do we want a computer to do?’. We don’t yet really know what happens when you ‘see’ something. For instance, when you see a car coming towards you, how does your brain recognise the car, realise it’s coming towards you, and then process this information to make your body move out of the way? Neuroscience is not (yet) much use here. However, if we don’t know how humans process what they’re seeing, how are we going to get a computer to do it?

The way that Engineers have approached this huge task is by breaking it into smaller, more manageable problems. Let’s think about that car again. First, before you can react, you need to recognise your surroundings. You need to identify the car, the road, the pavement and other things. Once you have done that you can start to figure out how fast the car is going, that it’s approaching you and that you should move out of the way. The same is true for almost any situation: before you can figure out what’s going on or how to act, you need to know what you are looking at. Therefore it makes sense that before computers can start doing anything useful with the images they get, they need to recognise what’s in the image.

Making a computer recognise objects in images, or object recognition, is the first major hurdle to overcome in computer vision. Once this is effective we can start to make a computer try to ‘understand’ the images by seeing how the objects relate to each other, how they’re moving, what actions to take and so on. However, while object recognition is only the first hurdle in computer vision, it stumped academics for over 30 years. To understand why, it’s worth considering what a monster of a problem object recognition is.

Object Recognition

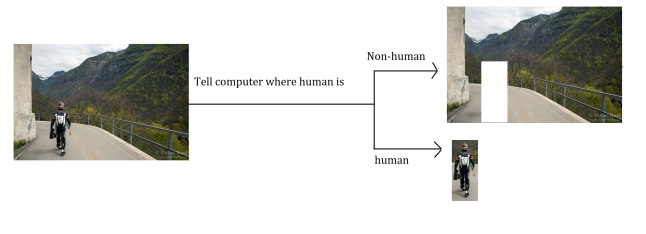

Let’s have a think about what we mean by object recognition. Say we want to get a computer to recognise a human in an image. The computer would be given an image and we want it to decide whether the image has a human in it, and where that human is in the image. Like this:

So how do we go about making this happen? The first thing to do is to appreciate the environment we are working in with this problem. What data does the computer get? How does this data relate to the real world?

The Data

The data the computer gets is an image. Images are made up of pixels, tiny dots on the screen that can be any color. An image that is 1024 x 768 has 1024 x 768 pixels, or 786,432 pixels. Each one of these pixels is a piece of data that needs to be processed. This sounds like a lot of data, but there’s more…

On a screen, each pixel is made up of three tiny sub-pixels, red, green and blue, which shine at different intensities to create the full range of colors. Correspondingly, each pixel in an image stores 3 color values: how intense its red, green and blue components are.

Not only that, but each pixel has a location in the image. This is represented by 2 numbers (x,y) showing where in the image the pixel is.

Therefore there are 5 variables associated with each pixel (2 for location, 3 for color). This means that in a 1024 x 768 image there are (786,432 x 5 =) 3,932,160 pieces of data! This is a huge amount of data to process. It’s also extremely daunting: among nearly 800,000 pixels you need to find the ones where a human is, and discard the rest.

While the amount of data to deal with is definitely a challenge (especially for video, which typically delivers 24 or more images a second!), it is not the main problem. The main problem is how images relate to the real world.
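
As a quick sanity check, the counts above can be reproduced in a few lines of Python. This sketch follows the post’s own way of counting (three color values plus two coordinates per pixel); in practice most image formats store only the color values, and a pixel’s (x, y) location is implied by its position in the grid. The 24 frames per second is the figure used above.

```python
def values_per_image(width, height, colour_channels=3, coordinates=2):
    """Return (pixel count, number of values) for one image, counting each
    pixel's colour channels plus its (x, y) location, as in the text."""
    pixels = width * height
    return pixels, pixels * (colour_channels + coordinates)


pixels, values = values_per_image(1024, 768)
print(pixels)  # 786432
print(values)  # 3932160

# Video repeats this for every frame; 24 frames per second as in the text.
FRAMES_PER_SECOND = 24
print(values * FRAMES_PER_SECOND)  # 94371840 values every second
```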

An image is a way to measure the world. However, it is a 2D measurement of a 3D world. Take a look at this:

This is a representation of the light that a camera collects when it takes a picture. X1, X2, X3 and X4 are points in the real world, and x1, x2 and x3 are their projections on the image.

As you are probably already aware, a picture is 2D. You can print a picture on a piece of paper. However the world we live in is 3D. Therefore when we take a picture we lose a dimension, namely depth. In the picture above you can see that the photo captures the x and y dimensions, but not the z.

This is a problem for Engineers because when you lose a dimension you lose information about the real world, information that is hard to get back. This is best seen by looking at X1 and X2 in the picture above. In the real world X1 and X2 are far away from each other, but in the photograph they are very close together. This is because X1 and X2 are far apart along the z axis (depth), which the image can’t capture, so they look close to each other in the image even though they’re far away.

Another problem with photos capturing only 2 dimensions of a 3D world is occlusion, or objects blocking one another. In the photo above you can see that X3 and X4 are both projected onto the same point. Because X3 is nearer to the camera, it blocks X4, so X4 will not be visible in the photograph.
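
Both effects fall out of the standard pinhole camera model, where a 3D point (X, Y, Z) lands on the image at (f·X/Z, f·Y/Z). The sketch below assumes an idealised pinhole camera at the origin looking along the z axis with focal length f = 1; the coordinates chosen for X1 to X4 are made up for illustration, since the original figure’s values aren’t available, and `visible` is a hypothetical helper that keeps the nearest point at each image location.

```python
import math


def project(point, focal_length=1.0):
    """Pinhole projection: a 3D point (X, Y, Z) lands at (f*X/Z, f*Y/Z)."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return (focal_length * X / Z, focal_length * Y / Z)


def visible(points, focal_length=1.0):
    """Among points that project to the same image location, keep only the
    nearest one (smallest Z); the others are occluded."""
    nearest = {}
    for p in points:
        key = tuple(round(c, 9) for c in project(p, focal_length))
        if key not in nearest or p[2] < nearest[key][2]:
            nearest[key] = p
    return list(nearest.values())


# Two points far apart in depth end up close together in the image.
X1, X2 = (1.0, 0.0, 2.0), (3.0, 0.0, 5.0)
print(math.dist(X1, X2))                    # about 3.61 apart in the world
print(math.dist(project(X1), project(X2)))  # about 0.1 apart in the image

# Two points on the same ray through the camera collide; the nearer one wins.
X3, X4 = (1.0, 1.0, 2.0), (2.0, 2.0, 4.0)
print(project(X3), project(X4))  # (0.5, 0.5) (0.5, 0.5)
print(visible([X3, X4]))         # [(1.0, 1.0, 2.0)]
```

Dividing by Z is exactly where depth gets lost: any point along the same ray from the camera gives the same image coordinates.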

So we need to process a huge amount of data that contains incomplete information about the real world. This is far from ideal: in general we would like a small amount of data that accurately measures the world. Before we even approach object recognition, then, we know we have our work cut out for us.

The Method

Let’s return to the problem at hand, object recognition. Say that instead of a human, we want a computer to recognise something much simpler in an image: an orange.

A classic method for getting a computer to do object recognition is template matching. Template matching uses a ‘template’, or model, of the object we want to find (in probabilistic terms it encodes our prior knowledge of what the object looks like), and then scans an image to see if any part of it is similar to the template. In the picture below you can see our template of what an orange looks like (an orange circle), and which part of the image looks most like our template after scanning it:

The technical name for scanning an image with a template is cross correlation. Cross correlation compares two sets of data and returns a measure of how similar they are. In the normalized form usually used for template matching, two identical sets of data score 1, completely unrelated ones score around 0, and an exactly inverted copy of the template scores -1.
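
A minimal sketch of normalized cross correlation (NCC) in plain Python, for two equally sized greyscale patches stored as lists of rows. The function name `ncc` and the tiny 3x3 ‘template’ are illustrative assumptions rather than any particular library’s API (OpenCV, for example, ships an optimized version behind `cv2.matchTemplate`). Subtracting each patch’s mean and dividing by the spread is what makes the score ignore overall brightness and contrast.

```python
import math


def ncc(patch, template):
    """Normalized cross correlation of two equally sized greyscale patches.

    Returns a value in [-1, 1]: 1 for a match (up to brightness and contrast),
    around 0 for unrelated patches, -1 for an inverted copy of the template.
    """
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    if len(a) != len(b):
        raise ValueError("patch and template must be the same size")
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a completely flat patch carries no pattern to compare
    return sum(x * y for x, y in zip(da, db)) / denom


template = [[0, 9, 0], [9, 9, 9], [0, 9, 0]]
brighter = [[v + 50 for v in row] for row in template]
inverted = [[9 - v for v in row] for row in template]
print(ncc(template, template))  # 1.0
print(ncc(brighter, template))  # 1.0: a uniformly brighter copy still matches
print(ncc(inverted, template))  # -1.0
```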

To visualise scanning the image, imagine that we are moving the circle around the whole image and looking at the data inside it. If the cross correlation with the template is high, the computer then thinks an orange is there. If it’s low then the computer ignores it.

So once we have a template, we can just scan an image and make a rule like ‘if the cross correlation with our template is above 0.8, then it’s an orange’. If no part of the image has a cross correlation above 0.8, then we simply say that there isn’t an orange in the image.
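
The scan-and-threshold procedure can be sketched as a brute-force loop: slide the template over every position, keep the best score, and apply the 0.8 rule. This is a minimal illustration, not an efficient implementation. It includes its own compact NCC function so it runs on its own, the helper names `match_template` and `find_object` are hypothetical, and the ‘image’ is a small made-up greyscale grid with the template pasted in at row 2, column 1.

```python
import math


def ncc(patch, template):
    """Normalized cross correlation of two equally sized greyscale patches."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return 0.0 if denom == 0 else sum(x * y for x, y in zip(da, db)) / denom


def match_template(image, template):
    """Slide the template over every position; return (best score, (row, col))."""
    h, w = len(template), len(template[0])
    best_score, best_pos = -1.0, None
    for top in range(len(image) - h + 1):
        for left in range(len(image[0]) - w + 1):
            patch = [row[left:left + w] for row in image[top:top + h]]
            score = ncc(patch, template)
            if score > best_score:
                best_score, best_pos = score, (top, left)
    return best_score, best_pos


def find_object(image, template, threshold=0.8):
    """Return the best match position if it clears the threshold, else None."""
    score, position = match_template(image, template)
    return position if score > threshold else None


template = [[0, 9, 0], [9, 9, 9], [0, 9, 0]]
image = [[0] * 6 for _ in range(6)]
for r, row in enumerate(template):
    for c, value in enumerate(row):
        image[2 + r][1 + c] = value

print(find_object(image, template))                        # (2, 1)
print(find_object([[0] * 6 for _ in range(6)], template))  # None
```

A blank image returns `None` because no patch clears 0.8, which is exactly the ‘there isn’t an orange’ case.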

That’s the basics of template matching. Seems pretty simple, right? Make a model of something and see if any part of the image looks like it. How did researchers hit a brick wall if that’s all there is to it?

Templates – the crux of the problem

When we make a template of an object, it needs to be a representation of what an object will look like in a picture. It therefore needs to be in 2D, because we are comparing it to a 2D picture.

However this leads to significant problems because objects in the real world are 3D, and creating 2D representations of them is tricky business.

The orange example hid this nicely, because an orange is unusually simple to make a template for. Think about it: any way you look at an orange (and in almost any lighting) it will appear in a 2D picture as an orange circle. For almost every other object we might want to detect, making these 2D templates becomes very hard very fast.

For instance, let’s think about how to make a template of a cube. When a photograph of a cube is taken, the cube can be in any orientation. Take a look at the gif below of some of the ways a cube could appear in a photo (borrowed from this website).

As you can see, there are many different ways that a cube can appear (imagine it rotating up and down as well as left and right). Yet if we want to find any cube that appears in an image, we need to make a template of each orientation. This is because if we had templates of only a few orientations, when we template match we will only be able to find a cube in those specific orientations. In order to detect a cube at any orientation, we need a template of every way a cube can appear in an image.

Let’s say we had 100 templates of the different ways a cube can look in an image. To detect a cube, we would then have to run template matching with each of these 100 templates, and if any of them had a high cross correlation anywhere in the image, the computer would detect a cube.
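
To get a feel for why this becomes expensive, here is a rough count of the work a brute-force scan does, followed by the ‘any template matches’ rule. The sizes used (a 1024 x 768 image, 64 x 64 templates, 100 templates) and the per-template scores are illustrative assumptions, and the count ignores speed-ups such as computing the correlation with FFTs.

```python
def template_matching_cost(image_w, image_h, tmpl_w, tmpl_h, n_templates):
    """Count positions scanned, template comparisons and pixel-level operations
    for brute-force template matching with n_templates templates."""
    positions = (image_w - tmpl_w + 1) * (image_h - tmpl_h + 1)
    comparisons = n_templates * positions
    pixel_operations = comparisons * tmpl_w * tmpl_h
    return positions, comparisons, pixel_operations


def cube_detected(best_scores, threshold=0.8):
    """Detect a cube if any template's best cross correlation clears the threshold."""
    return any(score > threshold for score in best_scores)


print(template_matching_cost(1024, 768, 64, 64, 100))
# (677505, 67750500, 277506048000): roughly 2.8e11 pixel-level operations per image

print(cube_detected([0.31, 0.42, 0.87]))  # True: the third template matched
print(cube_detected([0.31, 0.42, 0.55]))  # False
```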

Hopefully you can start to see how templates became a big issue for researchers. Objects like humans are much more complex than cubes, and if we want a computer to detect them we need templates that can account for all the ways you can position your limbs, all the angles the photo can be taken from, as well as accounting for all the different shapes, sizes and skin tones of people. You need thousands of templates to detect a human, and quite a bit of imagination!

Initial Attempts

Now that we have seen how making templates is at the heart of object recognition and that they are difficult to create, we can start to see the brick wall that researchers hit. Hundreds of papers have been dedicated to creating good templates, but a large proportion of them could not recognise objects reliably. This is because if the templates were anything less than perfect, template matching would not work properly.

Bad templates can lead to two problems. First, if your templates don’t accurately represent the object you want to find, the computer will probably miss that object in an image, even when it’s there (a ‘false negative’). If this happens, we know we should try to create a template that more accurately represents the object at hand. The second problem is that your template might produce ‘false positives’, meaning it thinks it has found an object in an image even when the object isn’t there. If this happens, we probably need to simplify the template so that it doesn’t mistake random bits of an image for objects.

Making templates is therefore a balancing act: you want to encompass all the unique features of an object, but you don’t want to be over-accurate or else you’ll get false positives. A quick example of what we mean by over-accurate: say you want to make templates of humans, but all your test subjects happened to be wearing red t-shirts. If you incorporated a red t-shirt into your template of a human, the computer would start looking for red in an image, and if it found any red it would think a human was likely to be there. But humans can wear any color t-shirt. Putting red in your template makes it too specific to your test subjects, giving the computer an excuse to find humans in an image where there aren’t any.
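
One way to make the two failure modes measurable is to count them against hand-labelled test images. A minimal sketch: the scores below are made up to mirror the two cases described above (a template that misses features of a person, and a red-t-shirt template that fires on anything red), not real measurements.

```python
def count_errors(scores, labels, threshold=0.8):
    """Compare detections (score above threshold) with ground truth.

    scores: best template-match score for each test image
    labels: True if a human really is in that image
    Returns (false negatives, false positives).
    """
    false_negatives = sum(1 for s, y in zip(scores, labels) if y and s <= threshold)
    false_positives = sum(1 for s, y in zip(scores, labels) if not y and s > threshold)
    return false_negatives, false_positives


# Six hypothetical test images: the first three contain a person, the last three do not.
labels = [True, True, True, False, False, False]

# A template that doesn't capture what people look like: misses real people.
inaccurate = [0.85, 0.55, 0.60, 0.30, 0.20, 0.25]
# A template that expects a red t-shirt: also fires on red objects with no person.
red_shirt = [0.90, 0.88, 0.85, 0.86, 0.82, 0.30]

print(count_errors(inaccurate, labels))  # (2, 0): two people missed
print(count_errors(red_shirt, labels))   # (0, 2): two false alarms
```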

Researchers initially tried to make templates by making mathematical models of objects. For example when trying to model humans, researchers relied on models of humans as stick men. The picture below is a random selection of how humans have been modeled in various papers.

While these representations may seem a bit simple and silly, they make sense. These templates can’t be too complex (or else they get false positives) but need to capture all the features of humans that make them appear uniquely ‘human’. Therefore making stick men to use as templates isn’t a bad place to start, as most people have the same body shape as a stick man. However this isn’t good enough to recognise humans.

This is because mathematical modelling always makes assumptions about the world, assumptions that aren’t necessarily true in the case of computer vision. For instance, when using stick men as templates we are assuming that humans have roughly the same shape as a stick man and will always appear in an image as one. This isn’t true in real life. People vary hugely in weight, some appearing more round than straight, which the stick man doesn’t account for. People also wear clothes that can conceal their body shape, for instance women wearing hijab. The stick man template will not detect these people in images because it assumes a priori certain things about the way humans look which are not universally true. When making a model, the assumptions inevitably include features that shouldn’t be there or exclude ones that should.

Another problem with the template of a stickman is that there are many different ways that a stickman can contort itself, some contortions being more likely to occur than others. For instance look at these two different stickman poses:

The template of the stickman on the left is likely to occur in images, as people pose like that all the time. Therefore it is a good template to use when searching for humans. The template on the right is extremely unlikely to occur in images, as it is almost impossible to position your body in this way. Therefore this isn’t a useful template to use when searching for humans. If we were to include the bad template the computer would detect a human where there isn’t one – for instance some branches of a tree may look similar to the bad template, so the computer would identify it as a human.

So even if our stickman model of a human were really accurate, we would still need to define the likely and unlikely poses it could take. This is a very hard task: how do you define all the likely poses a human could be in? Researchers again tried to express likely human poses mathematically, but this was a long, hard and fruitless task. You can’t model likely human poses without making dodgy assumptions that ultimately lead to bad object recognition. For instance, one research paper modeled limbs as ‘springs’, so the more you bent your limbs the more unlikely the pose was. This is a bad assumption because it is patently not true in real life: bent arms are not an unusual pose. However, this kind of mathematical modelling was the norm in object recognition for quite some time.
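
A toy version of the ‘springs’ idea shows how the bad assumption plays out. This is a hypothetical illustration, not the cited paper’s actual model: each joint has a rest angle and a stiffness k, bending away from rest stores spring energy 0.5 * k * (angle - rest)^2, and a pose’s relative likelihood falls off as exp(-energy). The rest angles and stiffness values are invented.

```python
import math


def spring_energy(joint_angles, rest_angles, stiffness):
    """Total spring energy 0.5 * k * (angle - rest)^2 over all joints (radians)."""
    return sum(0.5 * k * (a - r) ** 2
               for a, r, k in zip(joint_angles, rest_angles, stiffness))


def relative_likelihood(joint_angles, rest_angles, stiffness):
    """Unnormalised pose likelihood: more spring energy means a less likely pose."""
    return math.exp(-spring_energy(joint_angles, rest_angles, stiffness))


rest = [0.0, 0.0]  # hypothetical: both elbows straight at rest
k = [1.0, 1.0]     # hypothetical stiffness for each elbow

straight_arms = [0.0, 0.0]
bent_arms = [math.pi / 2, math.pi / 2]  # both elbows at 90 degrees: an everyday pose

print(relative_likelihood(straight_arms, rest, k))  # 1.0
print(relative_likelihood(bent_arms, rest, k))      # about 0.085
```

Under this model, two ordinary bent elbows make a pose over ten times less likely than straight arms, which is exactly the kind of assumption that does not hold for real people.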

The challenge Engineers set themselves was therefore huge. If you wanted to get a computer to recognise something, you had to mathematically define what the object looks like at every angle and how it would appear in the real world. This put research in a rut. Papers that claimed to recognise objects accurately only did so in very specific conditions (e.g. some papers could detect humans only if the background was completely black), while other papers filled pages with equations describing how objects could appear that ultimately didn’t lead anywhere, being either too simple (false negatives) or too complex (false positives).

Then machine learning was used to make templates and changed the approach completely.

Machine Learning

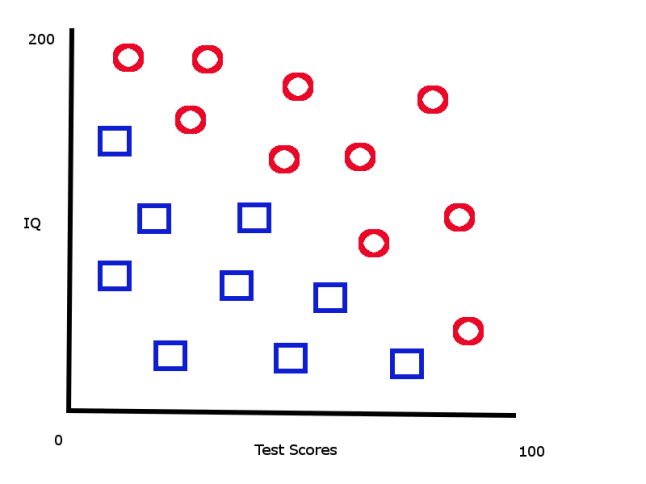

Machine learning is a field of Information Engineering that developed largely separately from computer vision. Put simply, machine learning is a way of classifying new data based on old data. Take a look at the picture below (from a previous blog post on machine learning):

Here is a graph of various students’ IQs and test scores. Each student is classified as going on to earn either above or below 30k a year: the red circles are above 30k, the blue squares below. This is our ‘old’ data, data we have already collected about students. When we get a new student’s test score and IQ, we can then look at this graph and predict whether they will earn above 30k or not. The student can be classified as above/below 30k because we already have data on past students.

Machine learning makes a computer automatically do this classification rather than a human. Let’s say instead of IQ and test score, we had 10 variables on each student like weight, gender, family income etc. as well as whether they earn above or below 30k. When we get a new student’s information, we cannot simply look at a graph and classify them as above/below 30k, because you can’t plot a graph with 10 dimensions. However if we give a computer enough information on past students, it will be able to take the new student’s information and classify them as above/below 30k. The computer can do this even if we have loads of variables about each student.

Machine learning is useful because after you have ‘trained’ a computer by giving it lots of old data, it will start classifying new data by itself. The computer will automatically look for old data that is similar to the new data, and classify it accordingly (e.g. in the picture above, if the new student has a high IQ and test score, the computer will see that other students with high IQ and test scores earn above 30k, so the new student will be classified as above 30k). Once you have trained the computer, you can sit back and relax while it classifies your new data. The computer program that does this classification is called (unsurprisingly) a classifier.
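
The ‘look for old data that is similar to the new data’ idea is essentially a k-nearest-neighbours classifier, one of the simplest classifiers there is. It is swapped in here as an illustration, not necessarily what any particular system uses, and the student data is made up. Because it only needs a distance between data points, the same code works whether each student has 2 variables or 10.

```python
import math
from collections import Counter


def knn_classify(new_point, old_points, old_labels, k=3):
    """Label a new data point by majority vote among the k most similar old points."""
    by_distance = sorted(zip(old_points, old_labels),
                         key=lambda pair: math.dist(pair[0], new_point))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical (IQ, test score) data for past students, with known earnings.
students = [(130, 85), (125, 90), (135, 80), (95, 50), (100, 55), (90, 45)]
earnings = ["above 30k", "above 30k", "above 30k",
            "below 30k", "below 30k", "below 30k"]

print(knn_classify((128, 88), students, earnings))  # above 30k
print(knn_classify((98, 52), students, earnings))   # below 30k
```

In practice you would usually rescale each variable first, so that one measured in large units (like family income) doesn’t dominate the distance.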

Classifiers are also fast. Once a computer is trained it can classify new data in a very efficient manner.

So what do machine learning and classification have to do with making templates? This is where the truly elegant insight comes in. If you think about it, when we are trying to recognise a human in an image we are classifying the pixels as either human/non-human. Our template of a human is essentially a classifier, telling the computer ‘here is what a human looks like in an image, everything that doesn’t look like this isn’t a human’. Humans are red circles, non-humans are blue squares.

When researchers were making templates with mathematical models, they were making classifiers based purely on their own reasoning and not on past data. They were relying on their insight to draw lines of distinction in a dizzying set of over 3 million data points. As we have seen, this was a daunting task that would make even the most confident Engineer wince. What they didn’t realise is that instead of conjuring up in their minds what a human looks like, they could use machine learning to learn from past images what a human looks like and do this classification for them automatically.

Here’s how it works. You collect a load of images with people in them. This is your old data to train the classifier. You then mark out in each image where the person is and then give this to the computer. This is your way of classifying pixels in an image as human or non-human, red circles or blue squares. Like the picture below:

Once you have fed it thousands of pictures that have been classified as human/non-human, the classifier will learn which sets of pixels in an image are likely to contain a human and which are not. This means that our trained classifier will be able to take a new picture, compare it with the past pictures it has seen, and determine whether or not there is a human in it.
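
The training-then-classifying loop can be sketched with a nearest-centroid classifier, swapped in here purely for illustration; real human detectors use far richer features and learning algorithms. The 3x3 patches and their labels are made up. Notice that averaging the ‘human’ patches literally produces a learned template: the data, not the Engineer, decides what the template looks like.

```python
def train_templates(patches, labels):
    """'Learn' one template per class by averaging that class's labelled patches."""
    templates = {}
    for label in set(labels):
        members = [p for p, y in zip(patches, labels) if y == label]
        templates[label] = [sum(values) / len(members) for values in zip(*members)]
    return templates


def classify(patch, templates):
    """Assign the patch to the class whose learned template it is closest to."""
    def squared_distance(template):
        return sum((x - t) ** 2 for x, t in zip(patch, template))
    return min(templates, key=lambda label: squared_distance(templates[label]))


# Hypothetical 3x3 training patches, flattened row by row and labelled by hand.
patches = [
    [0, 9, 0, 0, 9, 0, 0, 9, 0],  # upright, person-like shapes
    [0, 8, 0, 1, 9, 1, 0, 9, 0],
    [1, 9, 0, 0, 9, 0, 0, 8, 1],
    [0, 0, 0, 9, 9, 9, 0, 0, 0],  # background clutter
    [5, 5, 5, 5, 5, 5, 5, 5, 5],
    [9, 0, 9, 0, 0, 0, 9, 0, 9],
]
labels = ["human", "human", "human", "non-human", "non-human", "non-human"]

templates = train_templates(patches, labels)
print(classify([0, 9, 1, 0, 8, 0, 1, 9, 0], templates))  # human
print(classify([0, 0, 0, 8, 9, 9, 0, 0, 0], templates))  # non-human
```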

There are three huge benefits in doing this:

– You can give the computer pictures of people in different clothing, lighting, shapes, sizes, skin tones and so on. The computer will then determine what attributes are common across all humans and which are not (like red shirts aren’t important but general shape is). It is able to pick out subtleties that distinguish a human which an Engineer can’t.

– It drastically decreases the workload for the Engineer. Now making templates is simply a matter of giving a computer images that have been classified, which is so much easier than the previous task of modelling.

– It’s fast. Previously when you had hundreds of templates for something (remember the cube) you had to scan each template over each part of the image to see if you had a match. This takes a long time, even on modern computers. With machine learning you can find an object much quicker, as the computer just needs to see if the picture has any of the attributes of a human that it has already figured out.

I love this. It is a completely different way to approach object recognition and it has provided breathtaking results. To me this kind of insight is a mark of true genius as it makes a previously unsolvable problem trivial by incorporating a completely separate field.

From research to product

As you may have guessed from my examples, I specialised in human recognition. In this area the ultimate goal has been to get a ‘real-time’ 3D pose of a person from video, i.e. when I dance in front of a camera I want a 3D stickman on screen copying my movements at the same time.

It was a few years after machine learning started being incorporated into human recognition that this goal started to be realised. Researchers had attempted to train classifiers with pictures of people but they weren’t very good. The reason why is quite interesting.

A classifier that can find humans needs to train on a huge number of images. This is because humans are very complex objects, mainly because we can position our limbs in different ways (think back to the stick men). So for a classifier to learn all the different ways a human can appear, it needs thousands and thousands of images to learn from, or else it may not recognise a certain pose. Machine learning didn’t work for researchers at first because they didn’t have the time or manpower to train a classifier with so many images. The upfront grunt work was simply too much to handle in an academic setting.

Because of this, it was actually Microsoft that came out with the first system widely regarded as meeting this ultimate goal of human recognition: the Microsoft Kinect. Because Microsoft stood to profit from a good human classifier, they were willing to put in the money and time required to train one. If I recall correctly, the Kinect’s classifier was trained on over 100,000 images. The result is a system that can track you in real time, allowing you to use your movements to control a game.

But the Kinect isn’t the end of it. Classifiers will come out that are trained on more images and are more accurate. And classifiers are yet to be trained on many other objects that would be useful to recognise. Thanks to machine learning, computer vision has finally got off the ground and is starting to realise the goal of making computers see images like humans do.

Wow, very interesting article. I like the way you show the big picture. However, reading the full story, it seems quite obvious that you should leave the heavy lifting to the computers. Why has machine learning only now become so popular?

Two reasons, I think. First of all, machine learning is a relatively young field; it has only been a mainstream discipline for a few decades, so many Engineers didn’t know much about it until quite recently. Second, this kind of solution seems obvious in retrospect, but at the time it didn’t. It’s a bit like how the ideas behind Uber or Facebook seem so simple in retrospect, making you think ‘how did no one think of this before?’, but before they existed they weren’t obvious at all.
